US12525138 - Simulator and simulation method with increased precision, in particular a weapon system simulator, and weapon system provided with such a simulator

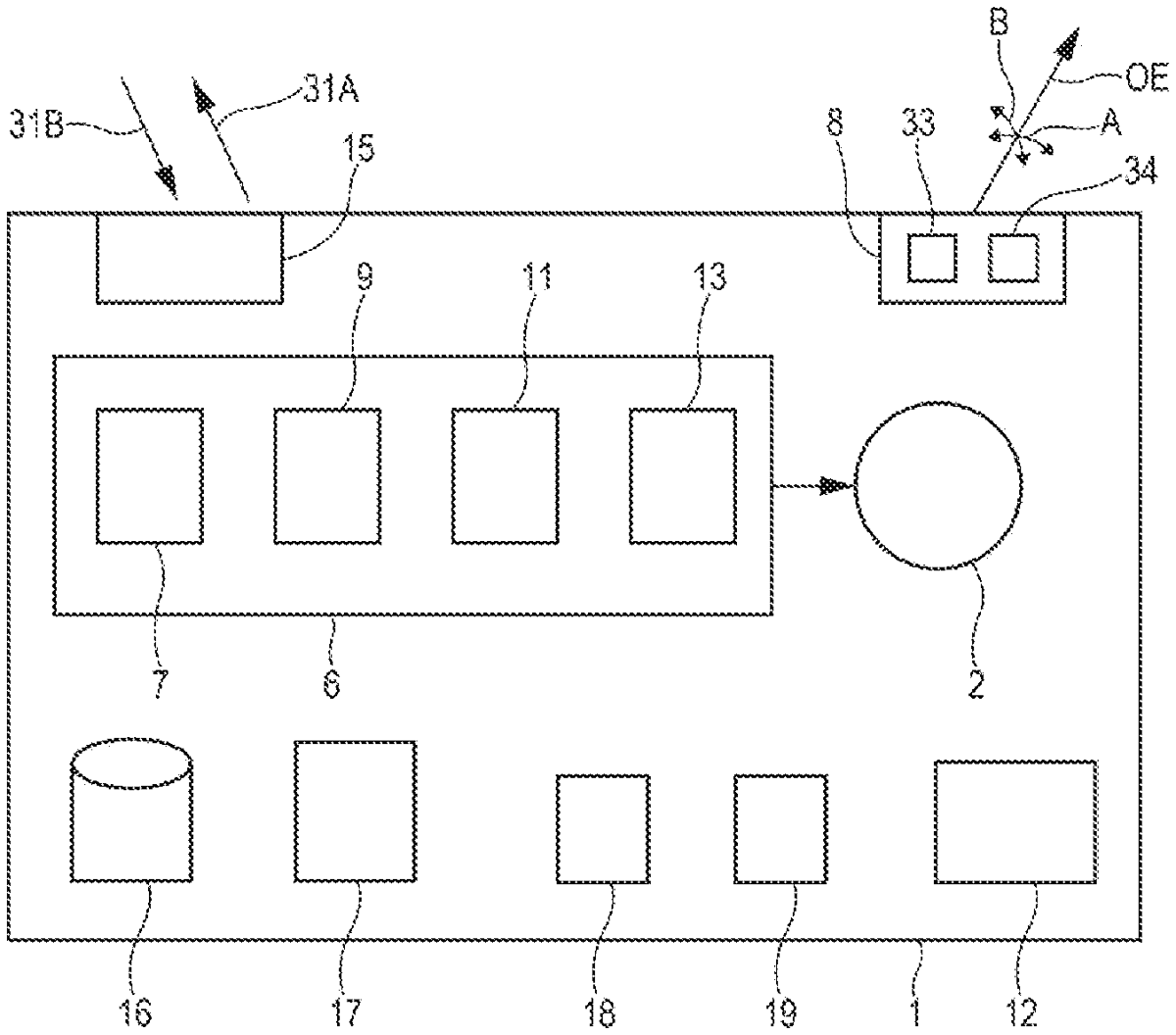

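The patent describes a weapon system simulator whose vision element displays a virtual image of the terrain and of the real actors in it. The image is defined by an orientation reference and a sighting reference and is generated from a terrain database, the position of the simulator, and the positions of the real actors. To increase precision, the simulator's rangefinder takes angle-error and telemetry measurements on real actors equipped with retro-reflectors and builds a captured image from them. Comparing the captured image with the current virtual image yields an orientation and/or sighting error value; the references are then reset to correct it, and the resulting optimised virtual image replaces the current one on the vision element.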

Claim 1

1. A method for simulating a field of vision of a vision element of a simulator, said vision element displaying a virtual image of a virtual world representative of a real world located in its field of vision, the virtual image comprising one or more virtual actors representative of real actors located in the terrain,

said method comprising a series of successive steps comprising a step of generating (E1) the virtual image, the virtual image being defined according to an orientation reference and a sighting reference and being generated from at least data of a terrain database, of the position of the simulator in the terrain and of the position of said real actor or actors in the terrain, the virtual image displayed on the vision element being referred to as current image, characterised in that said sequence of successive steps further comprises:

a step (E2) of generating an image referred to as captured image consisting in generating the captured image from measurements of angle-error and telemetry relating to one or more of said real actors, each of which is equipped with a retro-reflector, the measurements of angle-error and of telemetry being carried out by the simulator with the aid of a rangefinder generating telemetry emissions received and sent back by the retro-reflectors equipping the real actors;

a comparison step (E3) consisting in comparing the current virtual image and the captured image so as to determine at least one error value representative of an orientation and/or sighting error; and

a resetting step (E4) consisting in resetting the orientation reference and/or the sighting reference of the virtual image to correct said error value so as to create an optimised virtual image, this optimised virtual image then being displayed on the vision element instead of the current virtual image.
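The four steps E1 to E4 of the claim can be illustrated with a minimal Python sketch. It is not the patented implementation, and it rests on several simplifying assumptions that do not come from the patent: each "image" is reduced to a table of angular positions per actor rather than rendered pixels, the terrain database is omitted, each rangefinder return is assumed to be already associated with an actor identifier, and the error value is taken as the mean angular offset between the two images. The names `Reference`, `generate_virtual_image`, `generate_captured_image`, `compare_images` and `reset_reference` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Reference:
    """Hypothetical orientation/sighting reference of the virtual image."""
    azimuth: float    # radians, clockwise from the +y (north) axis
    elevation: float  # radians above the horizontal plane


def wrap(angle):
    """Wrap an angle to the interval [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


def generate_virtual_image(sim_pos, actor_positions, ref):
    """E1: place each actor at its angular offset from the reference axis."""
    image = {}
    for actor_id, pos in actor_positions.items():
        dx, dy, dz = (p - s for p, s in zip(pos, sim_pos))
        bearing = math.atan2(dx, dy)               # clockwise from north
        elev = math.atan2(dz, math.hypot(dx, dy))  # above the horizontal
        image[actor_id] = (wrap(bearing - ref.azimuth), elev - ref.elevation)
    return image


def generate_captured_image(measurements):
    """E2: build the captured image from (az_error, el_error, range) readings.

    The range is carried along but not used by this simplified comparison.
    """
    return {actor_id: (az, el, rng) for actor_id, (az, el, rng) in measurements.items()}


def compare_images(virtual, captured):
    """E3: mean angular offset (virtual minus captured) over shared actors."""
    shared = virtual.keys() & captured.keys()
    if not shared:
        return 0.0, 0.0  # nothing to compare, so no correction
    d_az = sum(wrap(virtual[a][0] - captured[a][0]) for a in shared) / len(shared)
    d_el = sum(virtual[a][1] - captured[a][1] for a in shared) / len(shared)
    return d_az, d_el


def reset_reference(ref, error):
    """E4: shift the reference so the virtual image lines up with the captured one."""
    return Reference(wrap(ref.azimuth + error[0]), ref.elevation + error[1])
```

With these helpers, one correction pass would generate the current image with `generate_virtual_image`, build the captured image from the rangefinder readings, pass both to `compare_images`, apply the result with `reset_reference`, and regenerate the image with the corrected reference to obtain the optimised virtual image. Averaging over all measured actors is one design choice among several; a weighted or robust estimate could be substituted without changing the overall flow.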

Google Patents

https://patents.google.com/patent/US12525138

USPTO PDF

https://image-ppubs.uspto.gov/dirsearch-public/print/downloadPdf/12525138